When I was 12 years old, I started learning to program computers. But in those days, that meant writing FORTRAN code on paper, then using a card punch machine to encode it, and sending the deck of punch cards to the computer center. I recently found one of my first programs. It was a piece of 11x14 green bar paper wrapped around a thin stack of 26 cards, with a rubber band around it. It was wrapped in exactly the same way I would have received it back from the System Operators on January 14, 1971, except it's a bit yellowed with age. The date printed on the paper is 71/014, the fourteenth day of 1971. That's how computer time was calculated; if you wanted to convert that to a calendar date, you needed another computer program.
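These days that conversion takes only a few lines. Here is a minimal Python sketch, certainly not what anyone ran in 1971. The function name `ordinal_to_calendar` is made up for illustration, and it assumes the two-digit year always means 19YY.

```python
from datetime import date, timedelta


def ordinal_to_calendar(stamp: str) -> str:
    """Convert a 'YY/DDD' stamp (year, day of year) to an ISO calendar date.

    Assumption: the two-digit year is in the 1900s.
    """
    yy, ddd = stamp.split("/")
    year = 1900 + int(yy)
    # Day 001 is January 1, so add (day number - 1) days to January 1.
    return (date(year, 1, 1) + timedelta(days=int(ddd) - 1)).isoformat()


print(ordinal_to_calendar("71/014"))  # 1971-01-14
```

Counting days from January 1 lets the library handle month lengths and leap years, which is the tedious part of doing this by hand.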

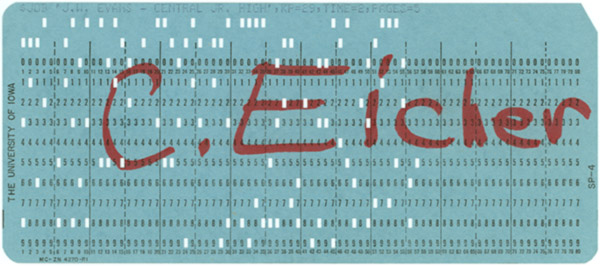

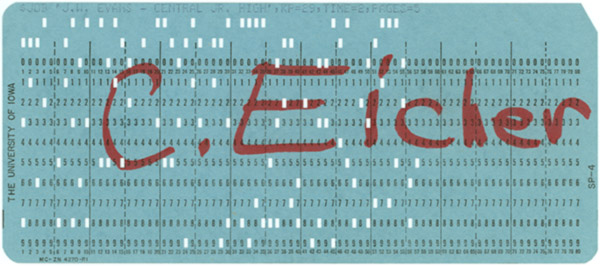

I unwrapped it and on top of the stack was my "separator card." Programs were punched on white cards with a blue card on the top of each program. That is my 12-year-old handwriting.

I saved a 1.2 MB PDF of the whole printout, but I'll just show the important bits here. This is a really stupid program. But I suppose it's not so bad for a 12-year-old kid in 1971. Nowadays any 12-year-old kid can write something more complex on a personal computer and get instant results. But back then, it took a full day to send the cards in and get a printout back.

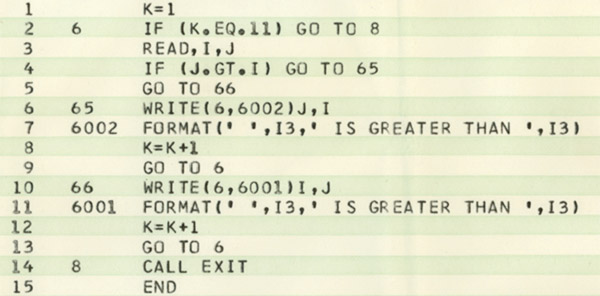

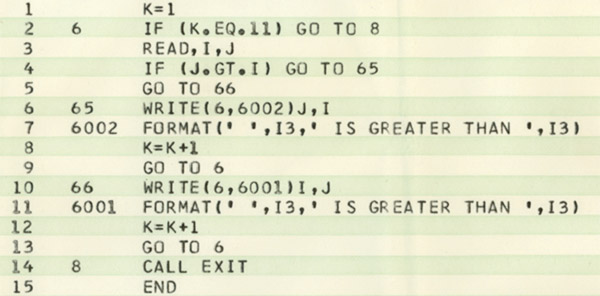

This must have been a class assignment to write a program that could compare two numbers punched on a card, and print which number was higher. But there are some really stupid programming tricks here. I'll explain one and why it's stupid. The program is designed to read a data card, compute which number is higher, print the results, and repeat until all 10 cards are done. It starts by setting a counter K to 1. After it prints each answer, it increments the counter, K=K+1, and loops back to the beginning to see if it should stop. But this is a stupid way to do it. The program stops when K gets to 11, which means the 10th card has been read, so don't read the 11th one, because it isn't there. But any normal computer programmer would have started with K=0 and counted to 10, not 11. And the test for whether to stop should be at the end, not the beginning, of the program.
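To make the two loop shapes concrete, here is a rough Python sketch of both. It is reconstructed only from the description above, not transcribed from the FORTRAN on the printout, and the function names and the list of `(a, b)` pairs standing in for data cards are made up for illustration.

```python
def run_test_at_top(cards):
    """The shape described above: K starts at 1, the stop test comes first."""
    results = []
    k = 1
    while True:
        if k > 10:          # K has reached 11: the 10th card is done, stop
            break
        a, b = cards[k - 1]  # "read" card number K
        results.append(max(a, b))
        k = k + 1
    return results


def run_test_at_bottom(cards):
    """The alternative: K starts at 0, the stop test comes last."""
    results = []
    k = 0
    while True:
        a, b = cards[k]      # "read" the next card
        results.append(max(a, b))
        k = k + 1
        if k >= 10:          # K counts cards processed; stop at 10
            break
    return results
```

Both versions process exactly 10 cards and produce the same answers. One detail worth noticing: when the counter starts at 0 and the test moves to the bottom, the comparison becomes K >= 10 (or K equal to 10). A test for K > 10 at the bottom only works if K still starts at 1.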

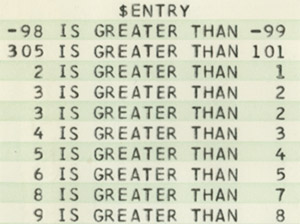

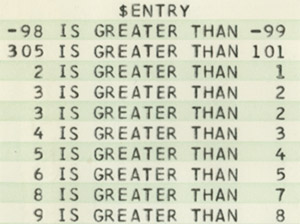

Now here are the results.

When I got this program back, I could not believe it. How could a computer possibly know which number was higher? I remember being baffled at my math teacher's explanation: you subtract the second number from the first, and if the result is negative, the second one is greater. If positive, the first one is greater. But then, how does the computer know how to subtract? And how does it know if the result is positive or negative? Um... that's a little trickier. How do you know how to do that?
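The teacher's explanation can be sketched in a few lines of Python. This is an illustration, not the original program. The function names are made up, the "equal" case is an addition the explanation above leaves out, and `sign_bit` assumes the value fits in a 32-bit two's-complement word, which is how the System/360 stored integers.

```python
def compare_by_subtraction(first, second):
    """Decide which number is greater by looking at the sign of first - second."""
    diff = first - second
    if diff < 0:
        return "second is greater"
    if diff > 0:
        return "first is greater"
    return "equal"  # not covered by the explanation above


def sign_bit(value, bits=32):
    """Top bit of the two's-complement form of value: 1 means negative.

    Assumes value fits in `bits` bits.
    """
    mask = (1 << bits) - 1          # keep only the low `bits` bits
    return (value & mask) >> (bits - 1)


print(compare_by_subtraction(3, 7))  # second is greater
print(sign_bit(3 - 7))               # 1
```

So part of the answer to "how does it know if the result is negative" is that it doesn't need to know much: in two's complement, the hardware only has to look at one bit of the result.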

To me, the most interesting thing on this printout is at the bottom, where it lists the time the computer took to run this program. This was a huge IBM/360 mainframe costing millions of dollars. It took 0.13 seconds to compile the program, and 0.08 seconds to run. The blue card at the top of the program says to stop the program if it took more than 2 seconds to run. That would only happen if you got stuck in a loop and the program ran forever. Programs like that could stop a huge mainframe computer dead in its tracks; nothing would get done until that program was halted. I think I recall we got something like 2 seconds of computer runtime every semester, so one accident like that and you used up your whole semester's worth of computer time in one shot. You'd have to beg the System Operators for more time, and apologize for your stupidity in wasting a whole two seconds, almost an eternity in IBM/360 time.

I had a similar experience starting in 1969 in high school, where we used a programmable calculator (later I would learn exactly why it could not be called a true computer). That led to GE Timesharing and BASIC. At that time it was a true oddity for a 14-year-old kid to show up at a university with a real computer lab, carrying a letter of recommendation saying "This kid can program, help him learn until he graduates from high school." I have my high school teachers to thank for starting my epic career over 40 years ago.